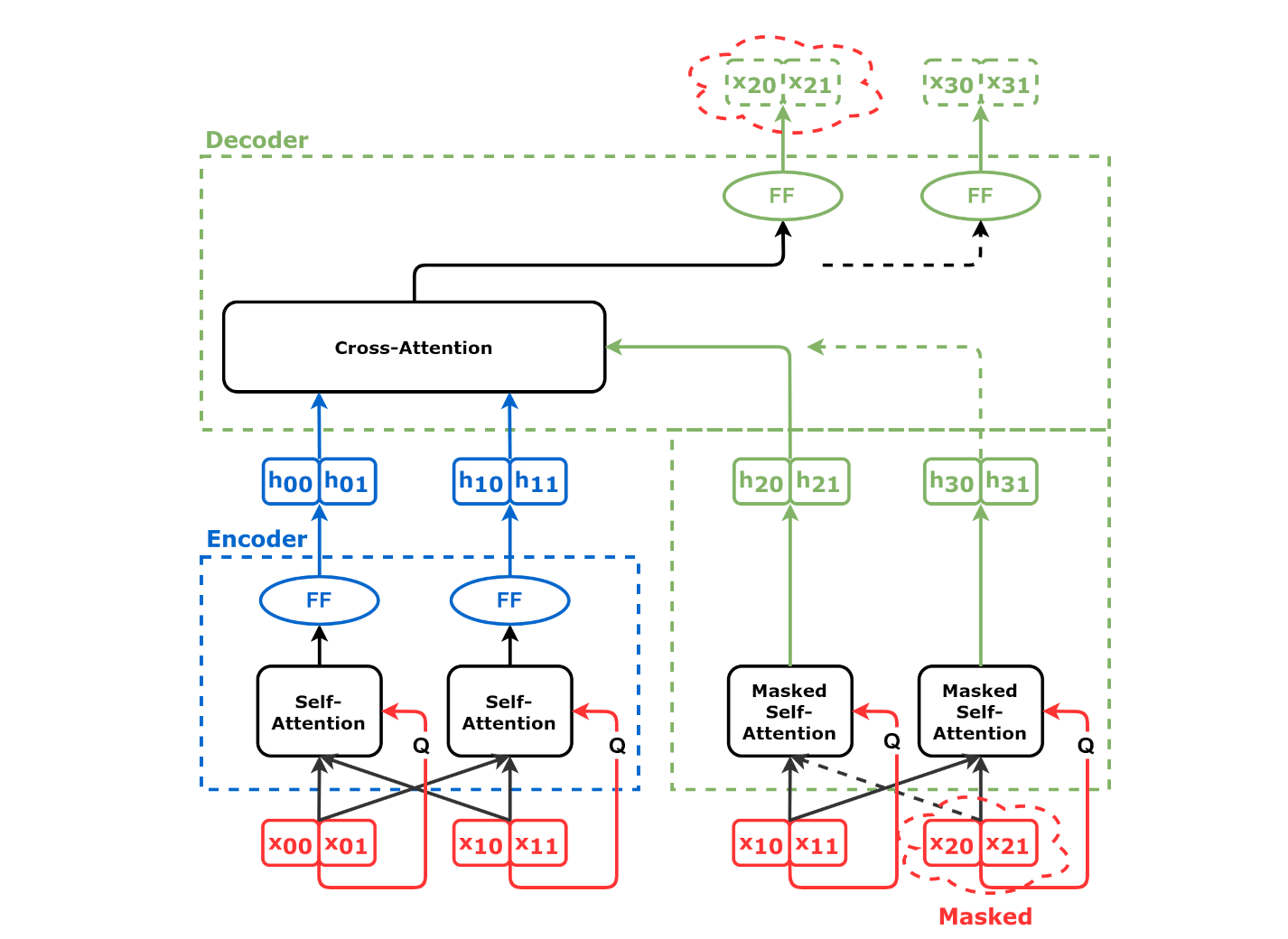

Sequence to Sequence (Seq2Seq)

These images were originally published in the book “Deep Learning with PyTorch Step-by-Step: A Beginner’s Guide”.

They are also available at the book’s official repository: https://github.com/dvgodoy/PyTorchStepByStep.



Papers

- Encoder-Decoder Architecture: “Sequence to Sequence Learning with Neural Networks” by Sutskever, I. et al. (2014)

- Self-Attention / Transformer: “Attention Is All You Need” by Vaswani, A. et al. (2017)

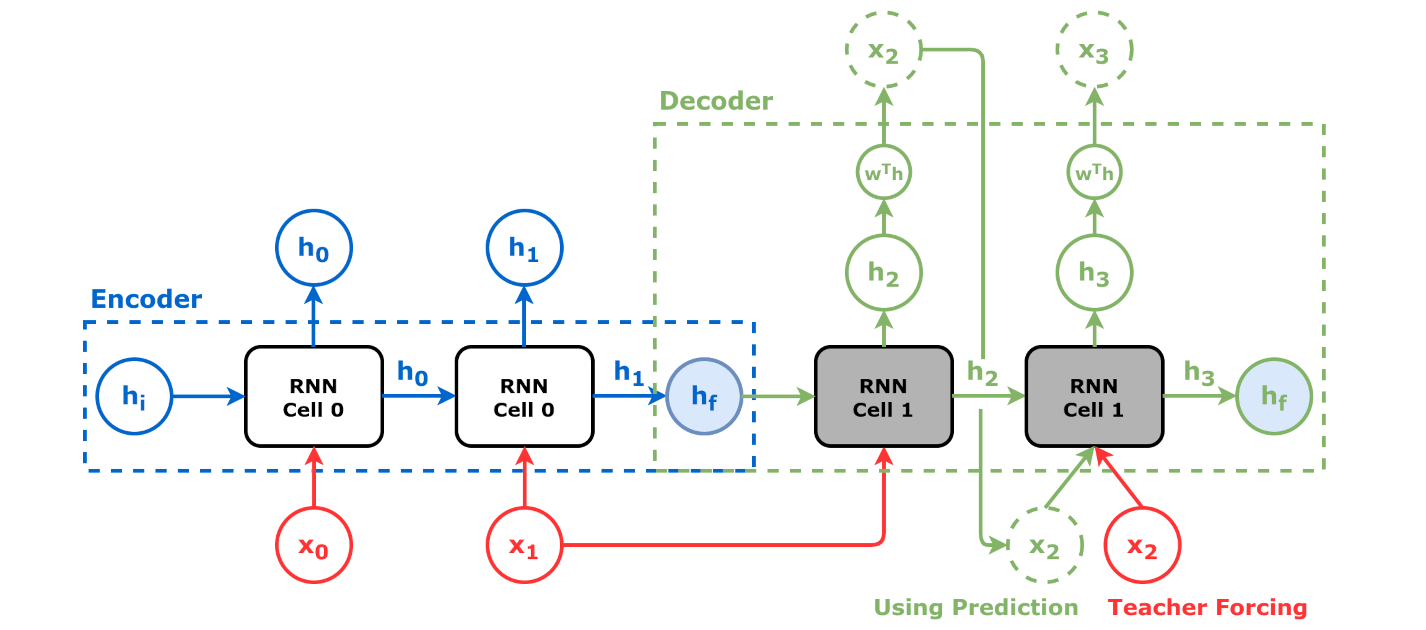

RNN

Source: Chapter 9

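The encoder-decoder architecture from the Sutskever et al. paper listed above can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the book's code:

- It uses plain tanh RNN cells (the paper used LSTMs).
- The weights are random and untrained.
- The names `RNNCell`, `encode`, and `decode` are hypothetical.
- Seeding the decoder with the last source element is one common choice; a start-of-sequence token is another.

The encoder's final hidden state becomes the decoder's initial hidden state. The decoder then feeds each prediction back in as its next input.

```python
import math
import random


def init_matrix(rows, cols, rng):
    # Small random weights; training would adjust these, but here they stay fixed.
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(W, v):
    # Matrix-vector product using nested lists.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


class RNNCell:
    """Plain tanh RNN cell: h_t = tanh(W_ih x_t + W_hh h_{t-1} + b)."""

    def __init__(self, n_in, n_hidden, rng):
        self.W_ih = init_matrix(n_hidden, n_in, rng)
        self.W_hh = init_matrix(n_hidden, n_hidden, rng)
        self.b = [0.0] * n_hidden

    def __call__(self, x, h):
        pre = [a + c + d for a, c, d in zip(matvec(self.W_ih, x), matvec(self.W_hh, h), self.b)]
        return [math.tanh(p) for p in pre]


def encode(cell, source, n_hidden):
    # Run the encoder over the whole source sequence, keeping every hidden state.
    h = [0.0] * n_hidden
    states = []
    for x in source:
        h = cell(x, h)
        states.append(h)
    return states  # states[-1] is the final hidden state handed to the decoder


def decode(cell, W_out, h, first_input, target_len):
    # Start from the encoder's final hidden state and feed each prediction
    # back in as the next input (autoregressive decoding, no teacher forcing).
    x, outputs = first_input, []
    for _ in range(target_len):
        h = cell(x, h)
        y = matvec(W_out, h)  # linear output layer: hidden state -> features
        outputs.append(y)
        x = y
    return outputs


rng = random.Random(42)
n_features, n_hidden = 2, 3
encoder = RNNCell(n_features, n_hidden, rng)
decoder = RNNCell(n_features, n_hidden, rng)
W_out = init_matrix(n_features, n_hidden, rng)

source = [[1.0, 1.0], [1.0, -1.0]]  # hypothetical two-step source sequence
enc_states = encode(encoder, source, n_hidden)
predictions = decode(decoder, W_out, enc_states[-1], source[-1], target_len=2)
print(len(enc_states), len(predictions))  # 2 2
```

The only link between the two halves is a single fixed-size hidden state. That bottleneck is what attention is meant to relieve.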

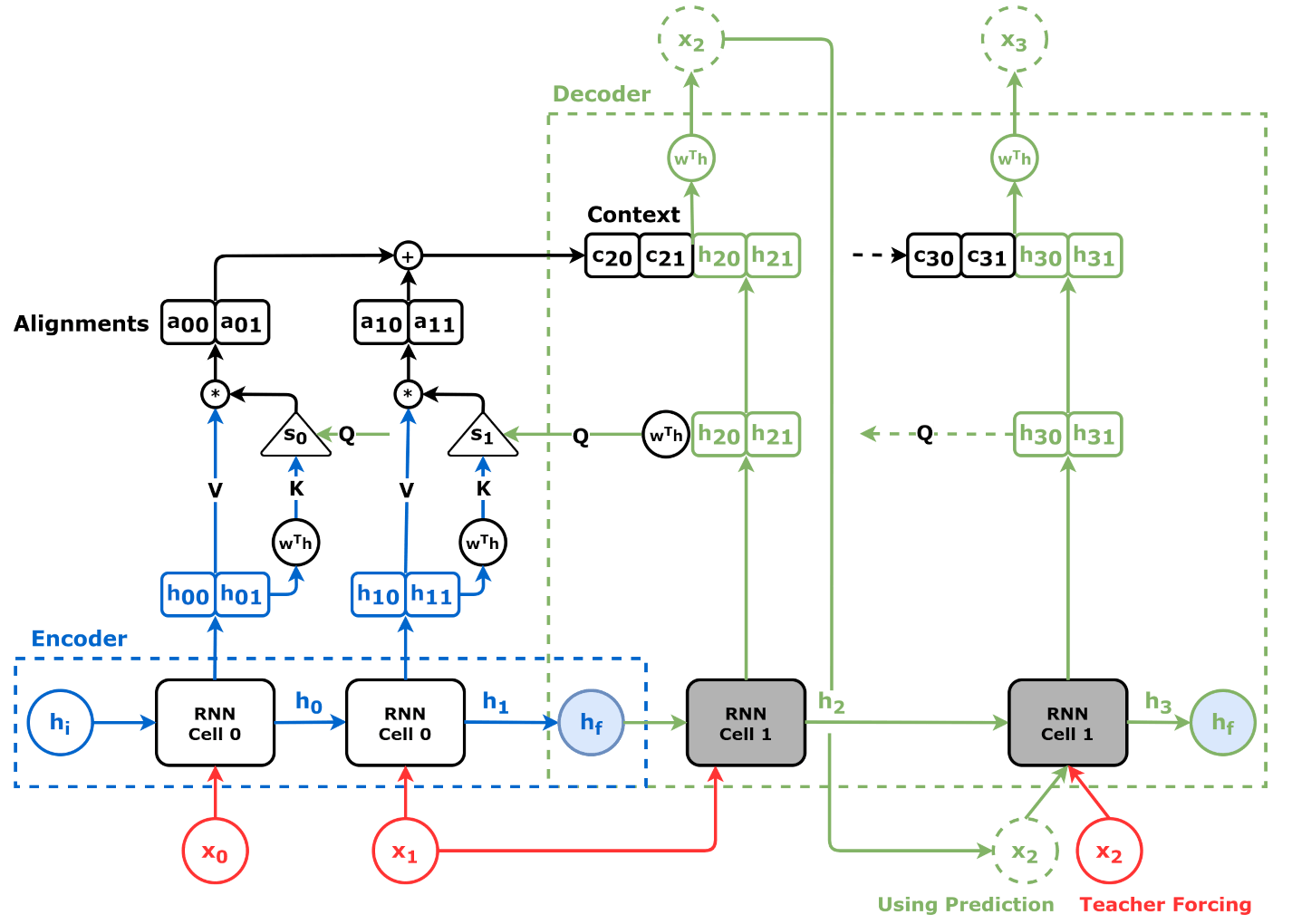

Attention

Source: Chapter 9


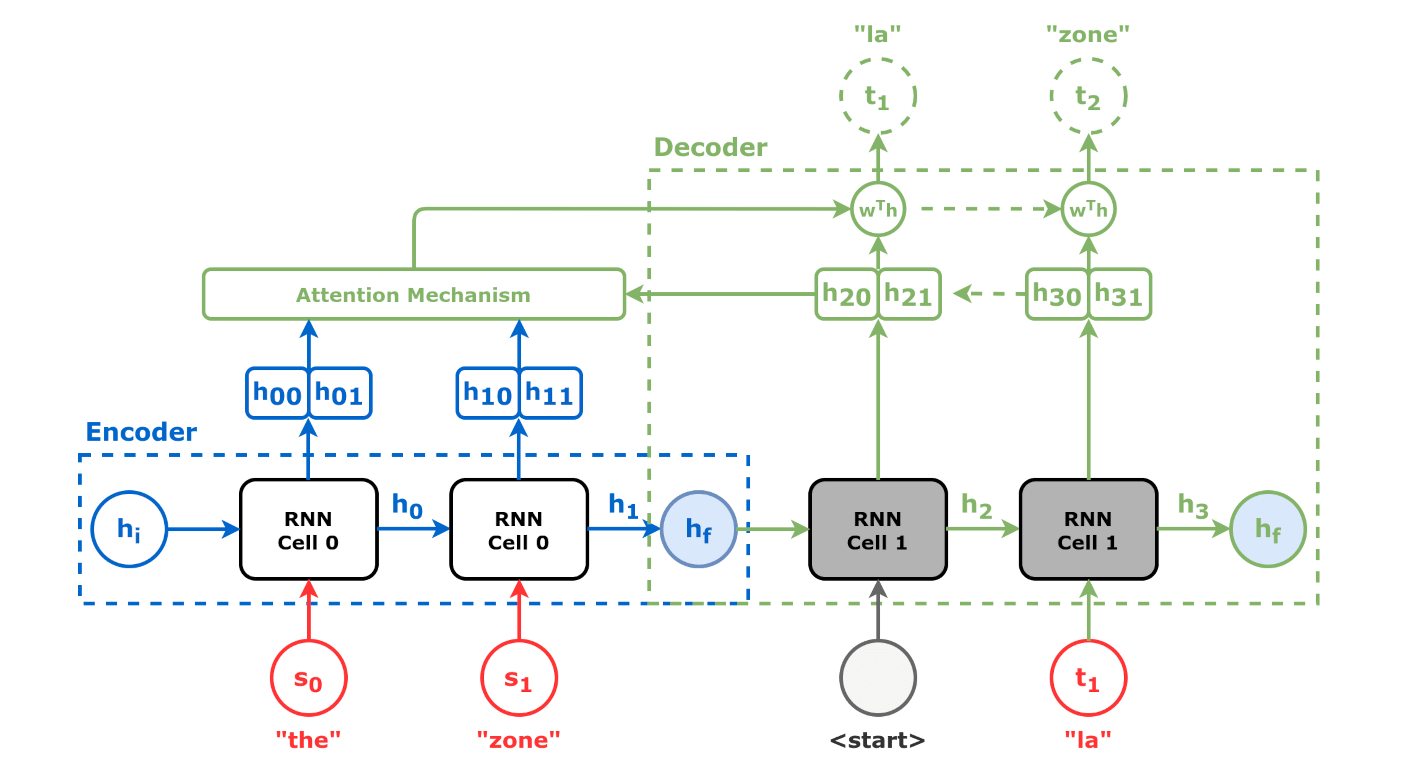

Source: Chapter 9

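Encoder-decoder attention can be sketched as follows: at each step, the decoder looks back at all of the encoder's hidden states instead of only the last one. This is a minimal, hypothetical sketch, not the book's code:

- The current decoder hidden state is the query.
- The encoder hidden states serve as both keys and values.
- The context vector is the attention-weighted average of the values.
- Scoring uses the scaled dot product from the Vaswani et al. paper listed above; other scoring functions, such as additive attention, also exist.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Returns the context vector (a weighted average of the values)
    and the attention weights (alphas) over the source positions.
    """
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    alphas = softmax(scores)
    context = [sum(a * v[j] for a, v in zip(alphas, values)) for j in range(len(values[0]))]
    return context, alphas


# Hypothetical encoder hidden states (one per source step), used as both keys and values.
encoder_states = [[1.0, 0.0], [0.0, 1.0]]
# Hypothetical current decoder hidden state, used as the query.
decoder_state = [2.0, 0.0]

context, alphas = attention(decoder_state, encoder_states, encoder_states)
print([round(a, 3) for a in alphas])  # [0.804, 0.196]
```

The query is most similar to the first encoder state, so that state receives most of the weight. Dividing by the square root of the key dimension keeps the scores from growing with vector size, which would otherwise push the softmax toward one-hot weights.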

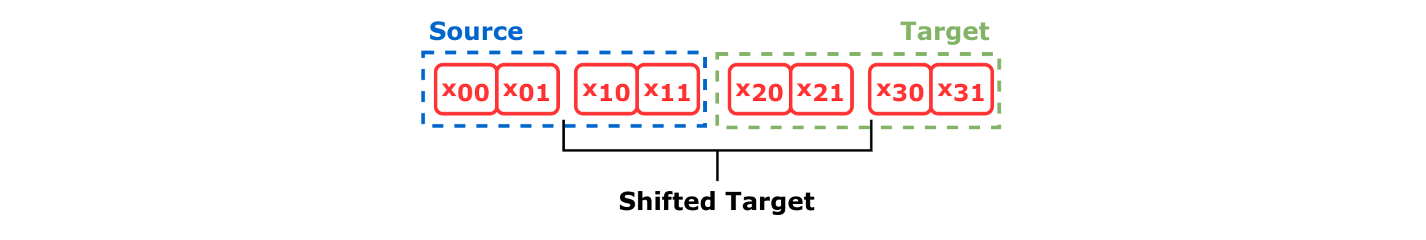

Self-Attention

Source: Chapter 9

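In self-attention, the queries, keys, and values are all projections of the same sequence. Each position therefore builds its output from every position of that sequence. In a decoder, a causal mask limits each position to earlier ones, as in the Transformer paper listed above.

This is a minimal single-head sketch, not the book's code:

- The identity matrices are hypothetical stand-ins for learned projections.
- The `causal` flag is an illustrative parameter.
- Multi-head attention, positional encodings, and batching are left out.

```python
import math


def matvec(W, v):
    # Matrix-vector product using nested lists.
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def softmax(scores):
    # Subtract the max for numerical stability; exp(-inf) evaluates to 0.0.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs, W_q, W_k, W_v, causal=False):
    """Single-head scaled dot-product self-attention over the sequence xs.

    Queries, keys, and values are all linear projections of the same sequence.
    With causal=True, position i may only attend to positions 0..i.
    """
    Q = [matvec(W_q, x) for x in xs]
    K = [matvec(W_k, x) for x in xs]
    V = [matvec(W_v, x) for x in xs]
    d_k = len(K[0])
    outputs, weights = [], []
    for i, q in enumerate(Q):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        if causal:
            # Mask future positions so they receive zero weight after the softmax.
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        alphas = softmax(scores)
        outputs.append([sum(a * v[j] for a, v in zip(alphas, V)) for j in range(len(V[0]))])
        weights.append(alphas)
    return outputs, weights


xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical 3-step sequence, 2 features each
identity = [[1.0, 0.0], [0.0, 1.0]]        # stand-in for learned projection matrices
outputs, weights = self_attention(xs, identity, identity, identity)
masked_outputs, masked_weights = self_attention(xs, identity, identity, identity, causal=True)
print(len(outputs), len(weights[0]))  # 3 3
print(masked_weights[0])              # [1.0, 0.0, 0.0]
```

Self-attention produces one output per input position and needs no recurrence. With the mask, the first position can only attend to itself.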

This work is licensed under a Creative Commons Attribution 4.0 International License.