Activation Functions

These images were originally published in the book “Deep Learning with PyTorch Step-by-Step: A Beginner’s Guide”.

They are also available at the book’s official repository: https://github.com/dvgodoy/PyTorchStepByStep.

Index


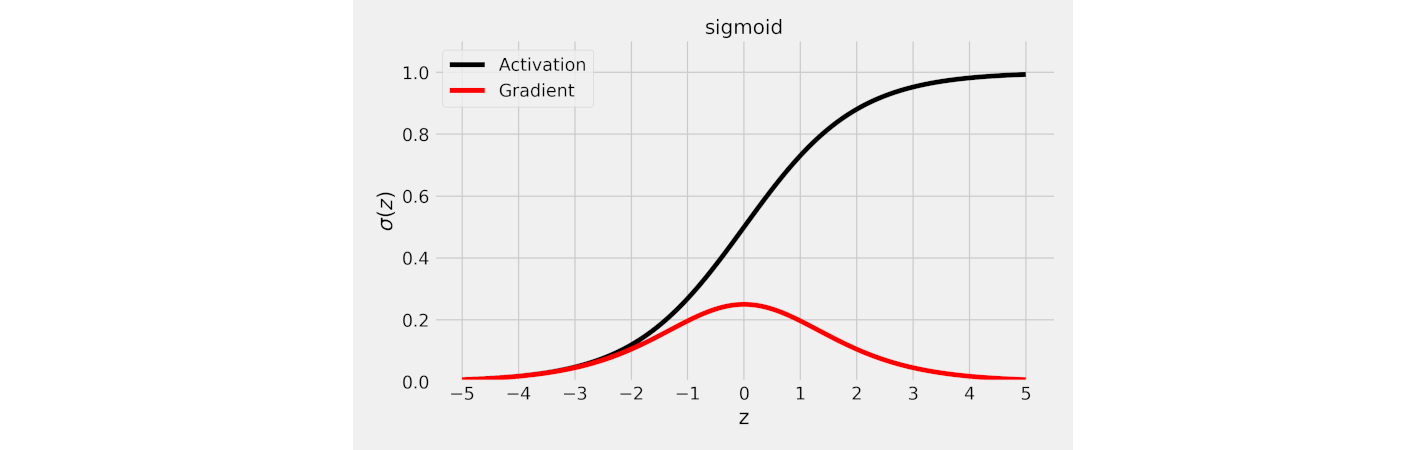

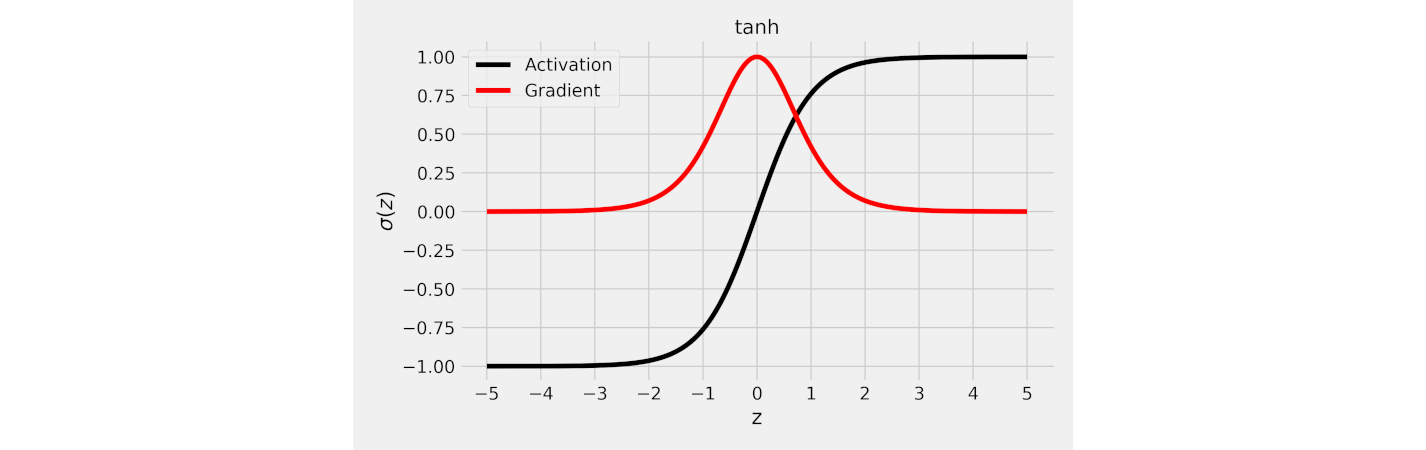

Functions

Sigmoid

Source: Chapter 4

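Here is a minimal sketch of the sigmoid and its derivative. It is written in plain Python so it runs without PyTorch, which is an assumption of this example; in PyTorch you would normally use `torch.sigmoid` or `nn.Sigmoid`. The derivative σ(z)(1 - σ(z)) never exceeds 0.25, and that maximum occurs at z = 0. This is one reason gradients tend to shrink when many sigmoid layers are stacked.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-z)); maps any real z into (0, 1)."""
    # Two branches keep math.exp from overflowing for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def sigmoid_grad(z: float) -> float:
    """Derivative sigmoid(z) * (1 - sigmoid(z)); largest (0.25) at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


for z in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.4f}  grad={sigmoid_grad(z):.4f}")
```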

Tanh

Source: Chapter 4


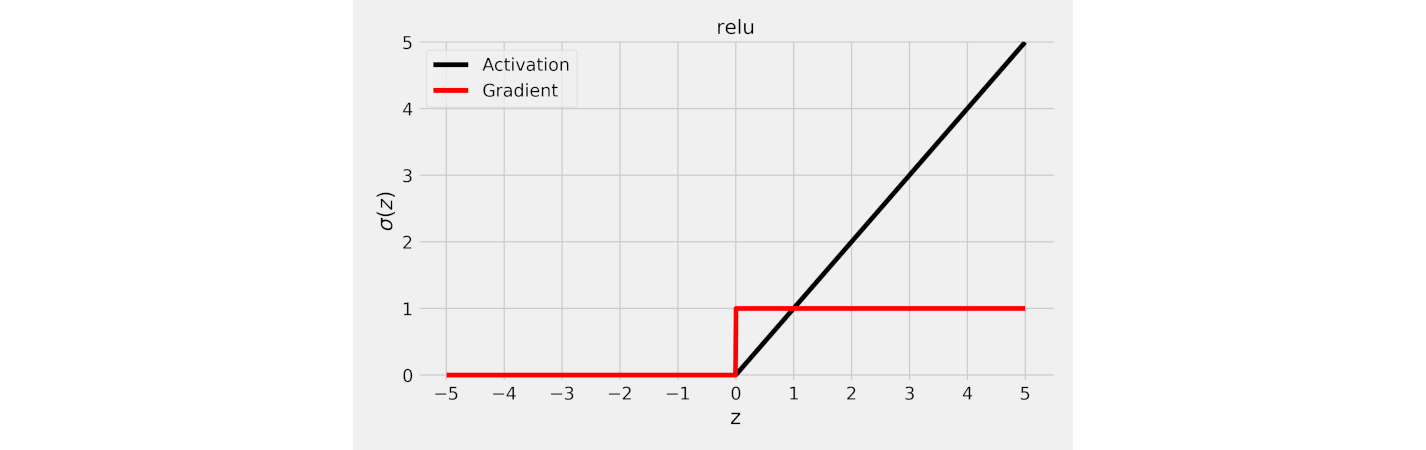
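Here is a small plain-Python sketch of tanh and its derivative 1 - tanh(z)². It uses plain Python instead of `torch.tanh` or `nn.Tanh` only so the snippet is self-contained. Tanh is zero-centered, with outputs in (-1, 1), and its gradient peaks at 1.0 instead of 0.25. The loop also checks the identity tanh(z) = 2σ(2z) - 1, which shows that tanh is a shifted and rescaled sigmoid.

```python
import math


def tanh(z: float) -> float:
    """Hyperbolic tangent; maps z into (-1, 1) and is centered at zero."""
    return math.tanh(z)


def tanh_grad(z: float) -> float:
    """Derivative 1 - tanh(z)^2; largest (1.0) at z = 0."""
    t = math.tanh(z)
    return 1.0 - t * t


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# tanh is a shifted and rescaled sigmoid: tanh(z) = 2 * sigmoid(2z) - 1
for z in (-2.0, 0.0, 0.5, 2.0):
    print(f"z={z:+.1f}  tanh={tanh(z):+.4f}  2*sigmoid(2z)-1={2 * sigmoid(2 * z) - 1:+.4f}")
```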

ReLU

Source: Chapter 4


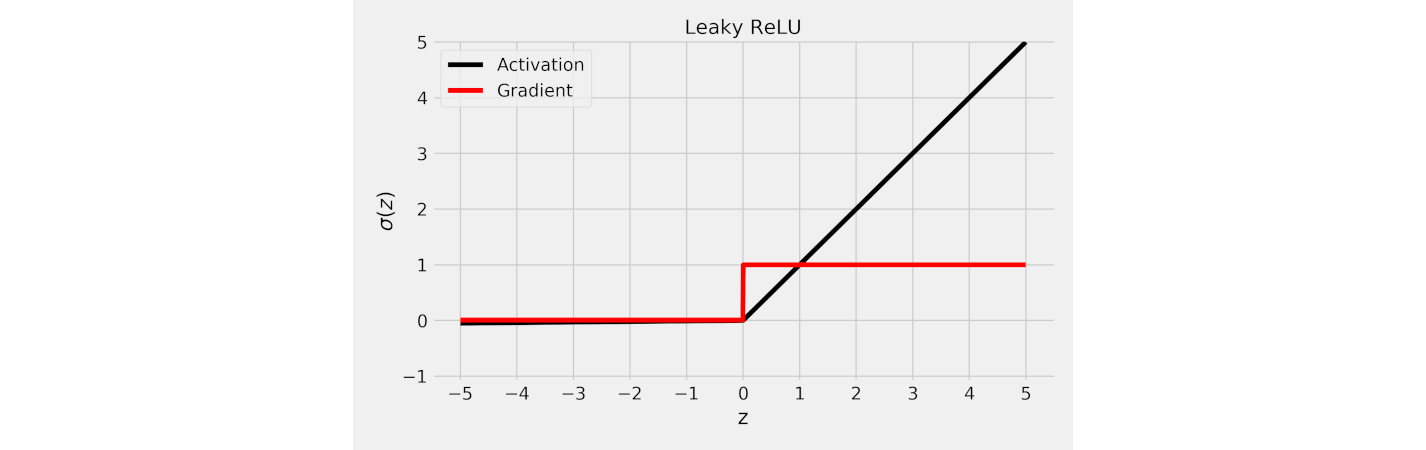
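Here is a plain-Python sketch of ReLU, max(0, z), and its gradient (PyTorch's equivalent is `nn.ReLU`). The gradient is 1 for positive inputs, so it does not saturate there. It is exactly 0 for negative inputs, so units that only ever receive negative inputs stop learning. The derivative is undefined at z = 0, and this sketch simply picks 0 there.

```python
def relu(z: float) -> float:
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)


def relu_grad(z: float) -> float:
    """1 for z > 0, 0 for z < 0; undefined at z = 0, where this sketch returns 0."""
    return 1.0 if z > 0 else 0.0


for z in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"z={z:+.1f}  relu={relu(z):.1f}  grad={relu_grad(z):.1f}")
```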

Leaky ReLU

Source: Chapter 4


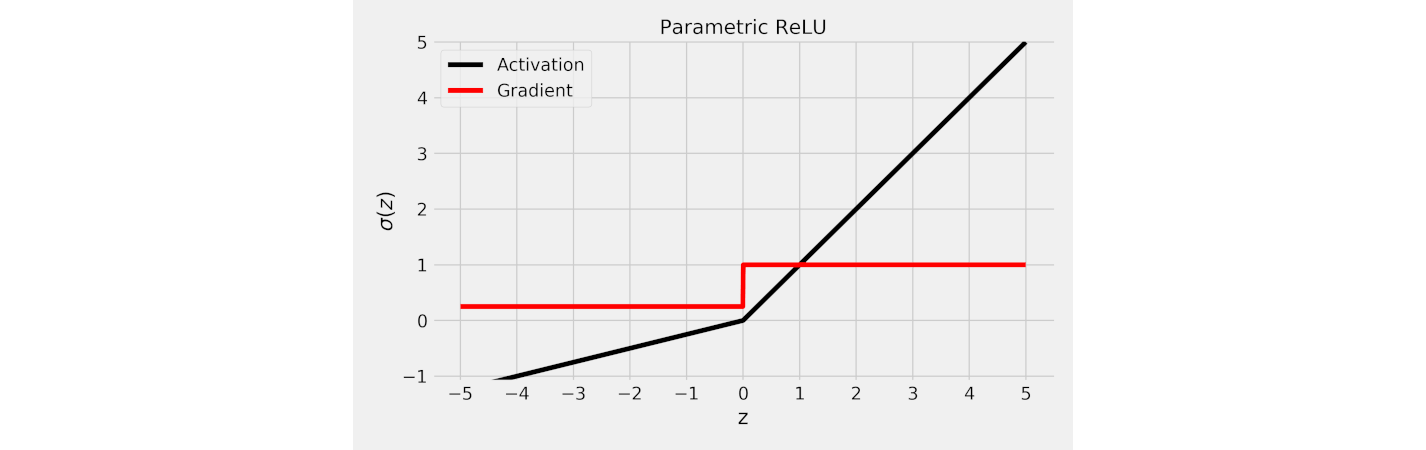
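Leaky ReLU keeps a small, fixed slope for negative inputs, so their gradient is never exactly zero. Here is a plain-Python sketch. The default `negative_slope=0.01` matches the default of PyTorch's `nn.LeakyReLU`, but any small positive value works.

```python
def leaky_relu(z: float, negative_slope: float = 0.01) -> float:
    """Identity for z >= 0, negative_slope * z for z < 0."""
    return z if z >= 0 else negative_slope * z


def leaky_relu_grad(z: float, negative_slope: float = 0.01) -> float:
    """Gradient: 1 for z >= 0, negative_slope for z < 0 (never exactly zero)."""
    return 1.0 if z >= 0 else negative_slope


for z in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"z={z:+.1f}  leaky={leaky_relu(z):+.3f}  grad={leaky_relu_grad(z):.2f}")
```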

Parametric ReLU (PReLU)

Source: Chapter 4


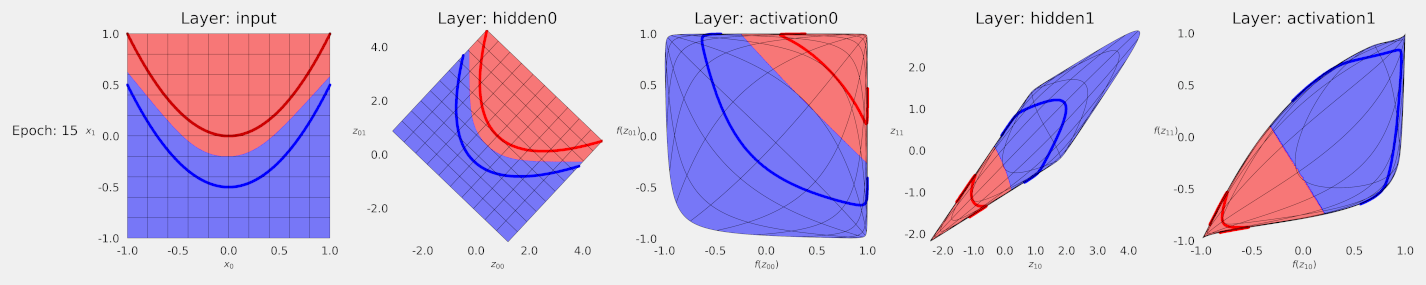

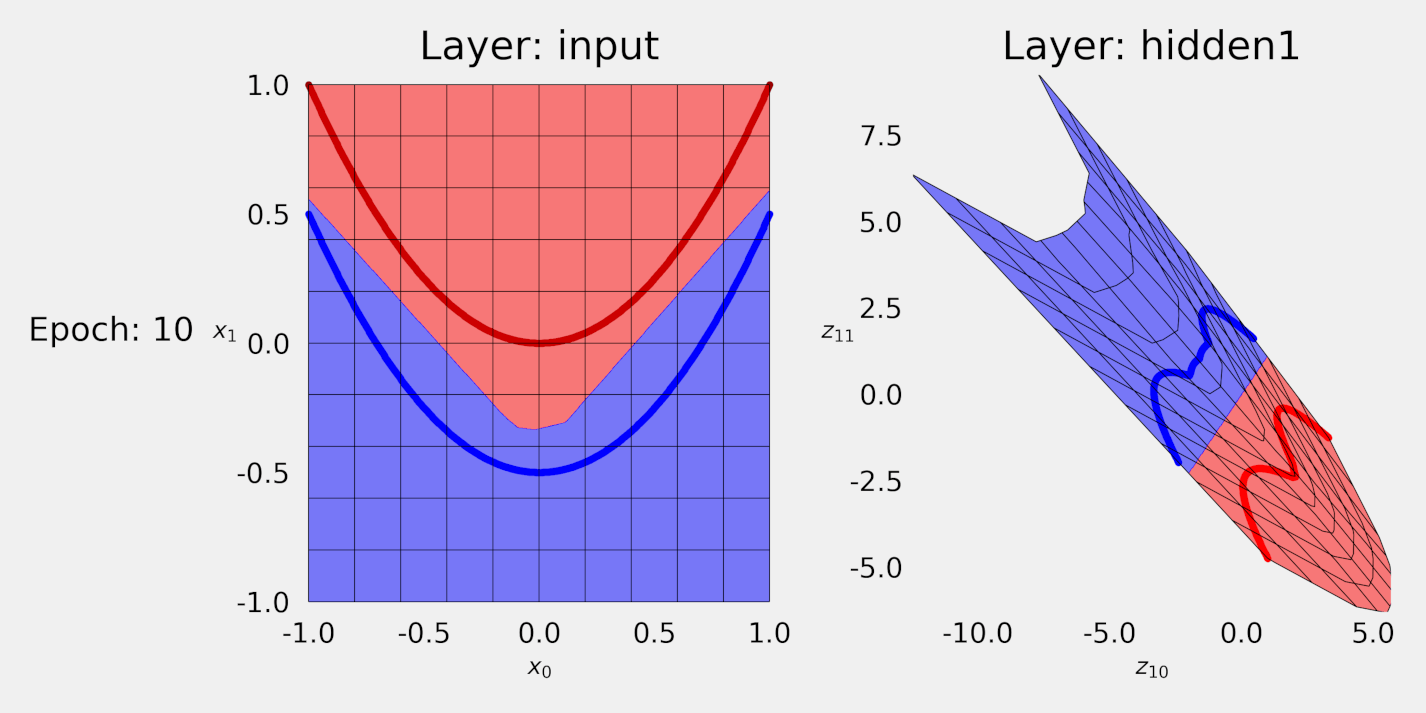
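PReLU has the same shape as Leaky ReLU, but the negative slope `a` is a learnable parameter. The sketch below computes the gradient with respect to both the input and `a`. It then performs one hand-rolled gradient-descent step on `a` under a squared-error loss. The input, target, and learning rate are hypothetical. The starting value a = 0.25 mirrors the default `init` of PyTorch's `nn.PReLU`.

```python
def prelu(z: float, a: float) -> float:
    """Parametric ReLU: z for z >= 0, a * z for z < 0, where a is learned."""
    return z if z >= 0 else a * z


def prelu_grads(z: float, a: float) -> tuple:
    """Return (d prelu / d z, d prelu / d a)."""
    if z >= 0:
        return 1.0, 0.0
    return a, z


# One gradient-descent step on a (hypothetical input, target, learning rate).
z, target, lr = -2.0, -1.0, 0.1
a = 0.25  # same starting value as nn.PReLU's default init

out = prelu(z, a)
loss_before = (out - target) ** 2
_, dout_da = prelu_grads(z, a)
dloss_da = 2.0 * (out - target) * dout_da  # chain rule
a -= lr * dloss_da
loss_after = (prelu(z, a) - target) ** 2
print(f"a={a:.2f}  loss {loss_before:.4f} -> {loss_after:.4f}")
```

With these numbers the slope moves from 0.25 to 0.45 and the loss drops. The same mechanism, driven by autograd, is what lets a PReLU layer adapt its negative slope during training.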

Transformed Feature Spaces

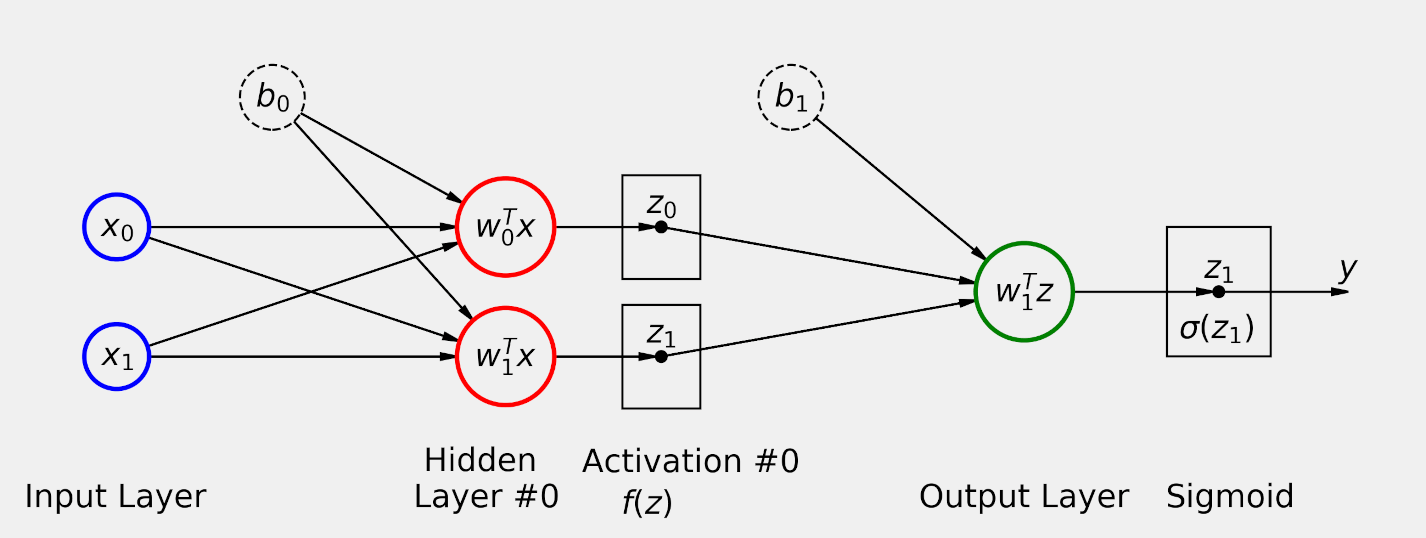

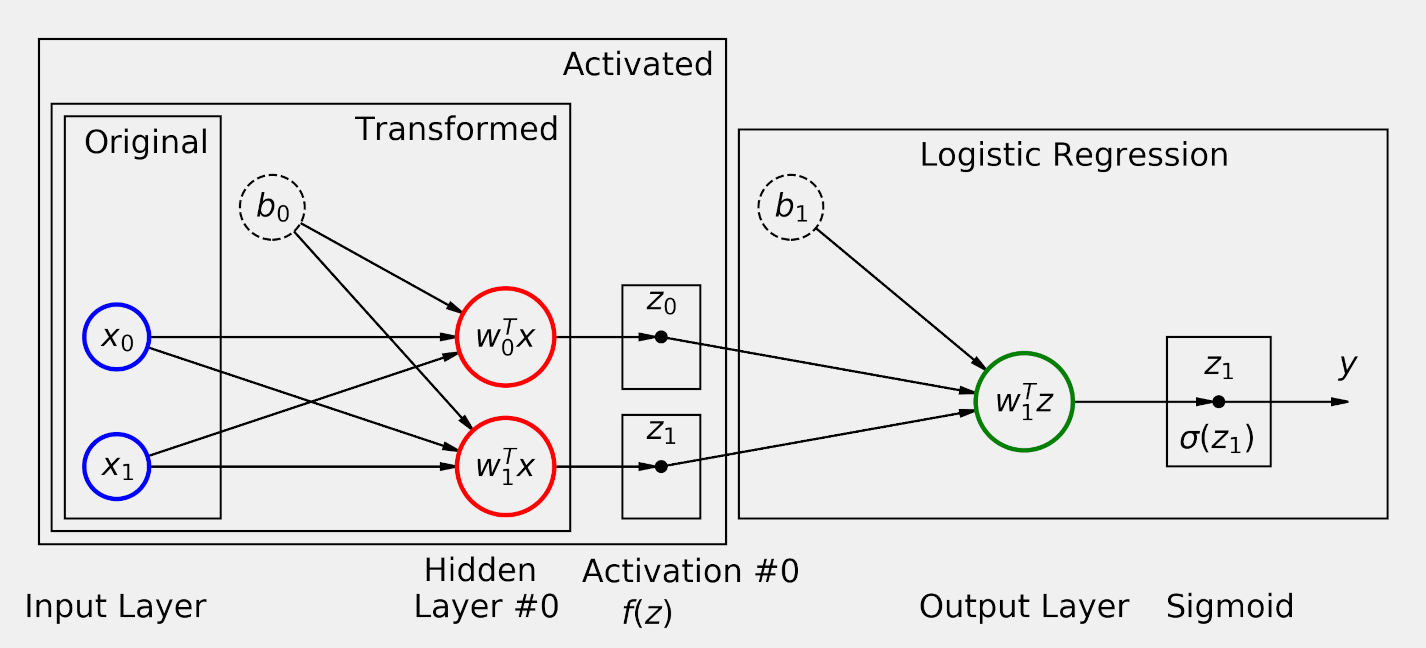

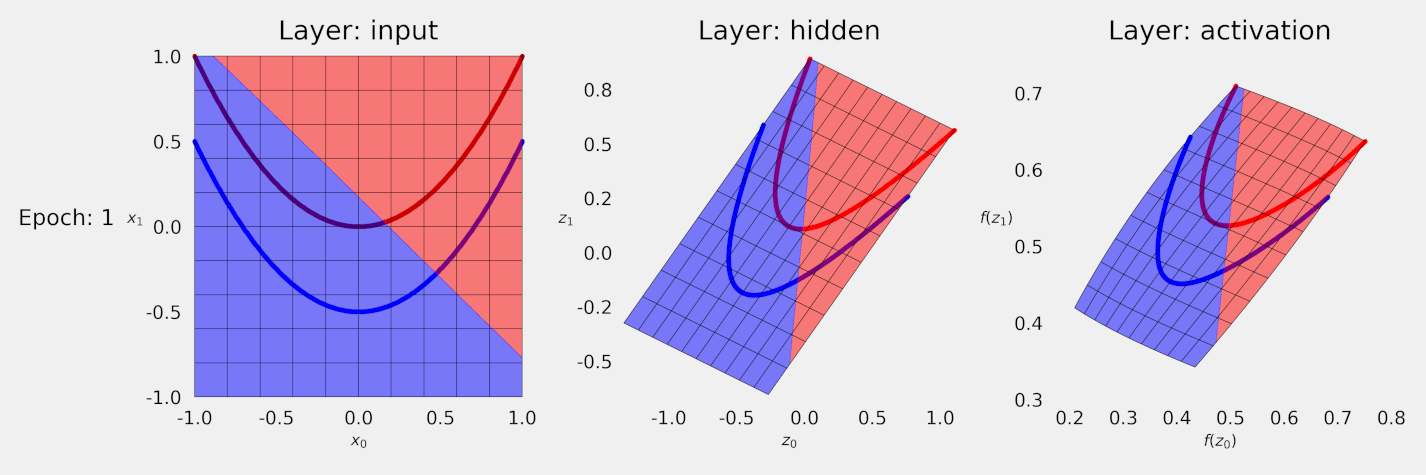

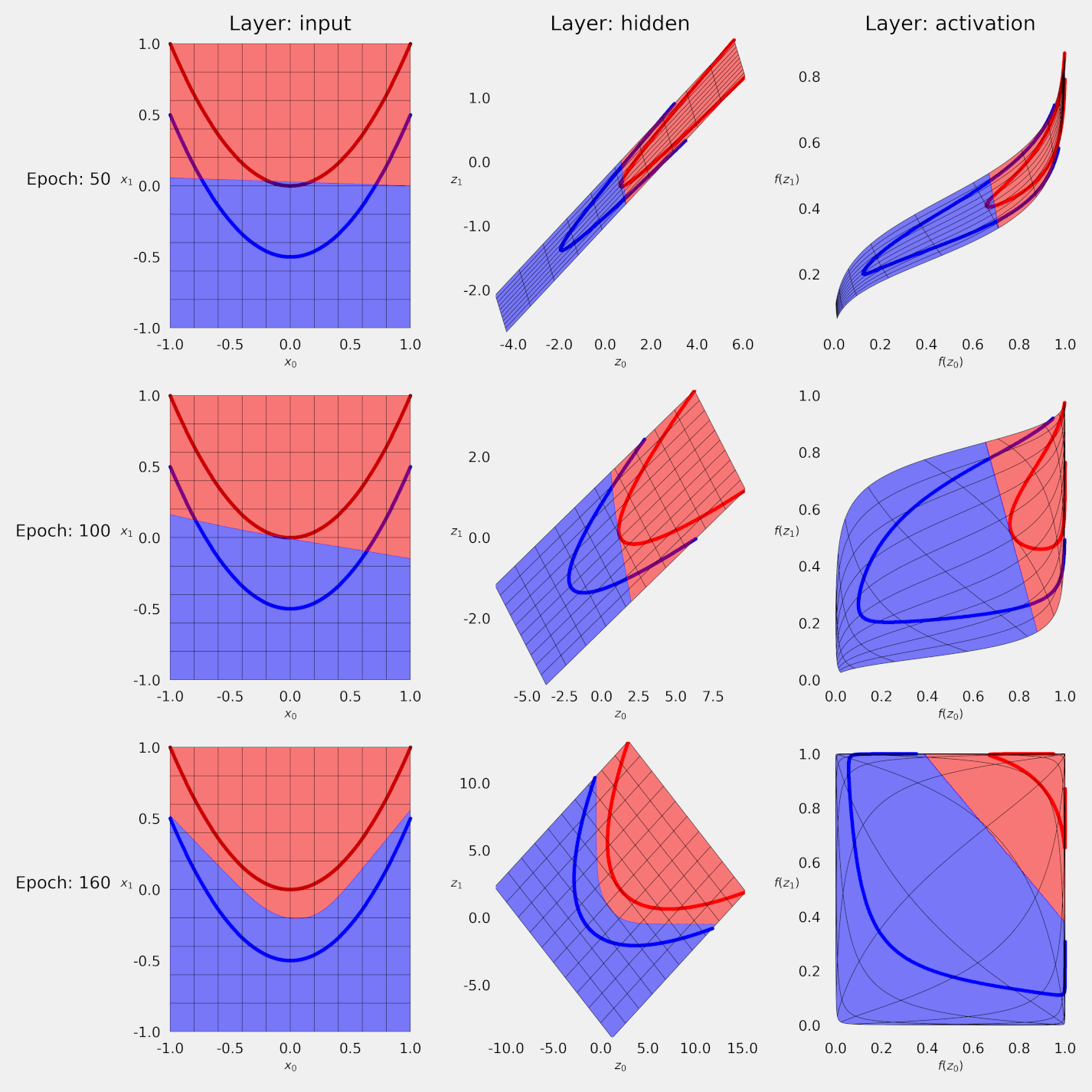

Single Hidden Layer

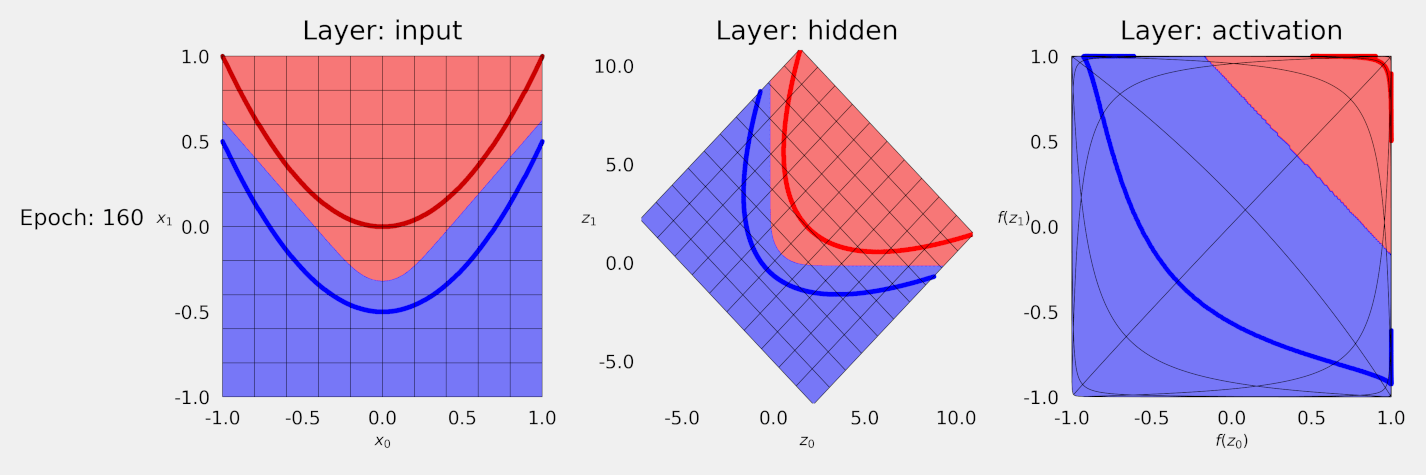

Transforming with Sigmoid

Transforming with Tanh

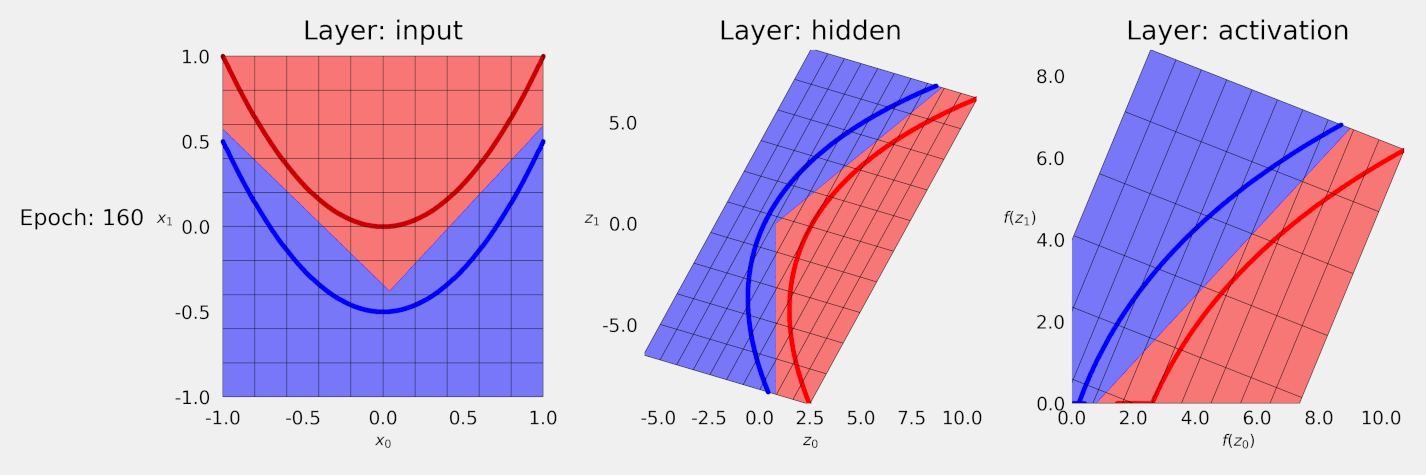

Transforming with ReLU

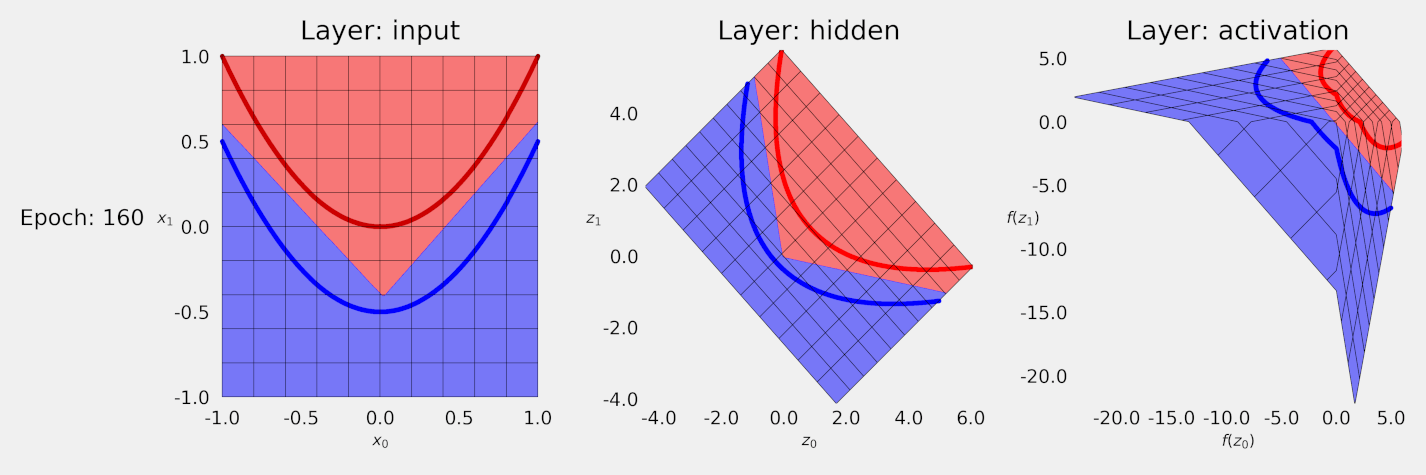

Transforming with PReLU
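The figures above show how a single hidden layer maps the original feature space into a new one. As a rough sketch, the code below applies that operation to a few 2D points: an affine transform followed by an element-wise activation. The 2x2 weights and bias are hypothetical and are not the trained values behind the figures. Each activation bends the space differently. Sigmoid squashes points into (0, 1)², tanh into (-1, 1)², ReLU folds negative coordinates onto the axes, and PReLU (with an assumed fixed slope of 0.25) shrinks them.

```python
import math


def linear(W, b, x):
    """Affine map W @ x + b, with W given as a list of rows."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def hidden_layer(W, b, x, activation):
    """One hidden layer: affine transform followed by an element-wise activation."""
    return [activation(z) for z in linear(W, b, x)]


# Hypothetical 2x2 weights and bias, chosen only for illustration.
W = [[1.5, -1.0], [0.5, 2.0]]
b = [0.1, -0.3]
points = [[-1.0, -1.0], [0.0, 0.0], [1.0, 0.5], [2.0, -1.5]]

activations = {
    "sigmoid": lambda z: 1.0 / (1.0 + math.exp(-z)),
    "tanh": math.tanh,
    "relu": lambda z: max(0.0, z),
    "prelu(a=0.25)": lambda z: z if z >= 0 else 0.25 * z,
}

for name, fn in activations.items():
    mapped = [hidden_layer(W, b, p, fn) for p in points]
    print(name, [[round(v, 3) for v in m] for m in mapped])
```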

Two Hidden Layers

Transforming Twice with Tanh

Transforming Twice with PReLU

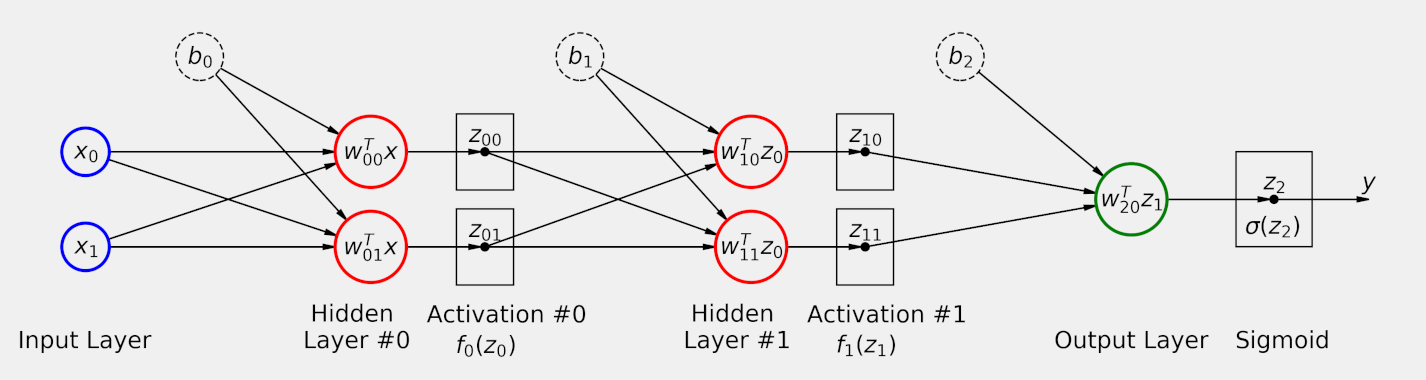

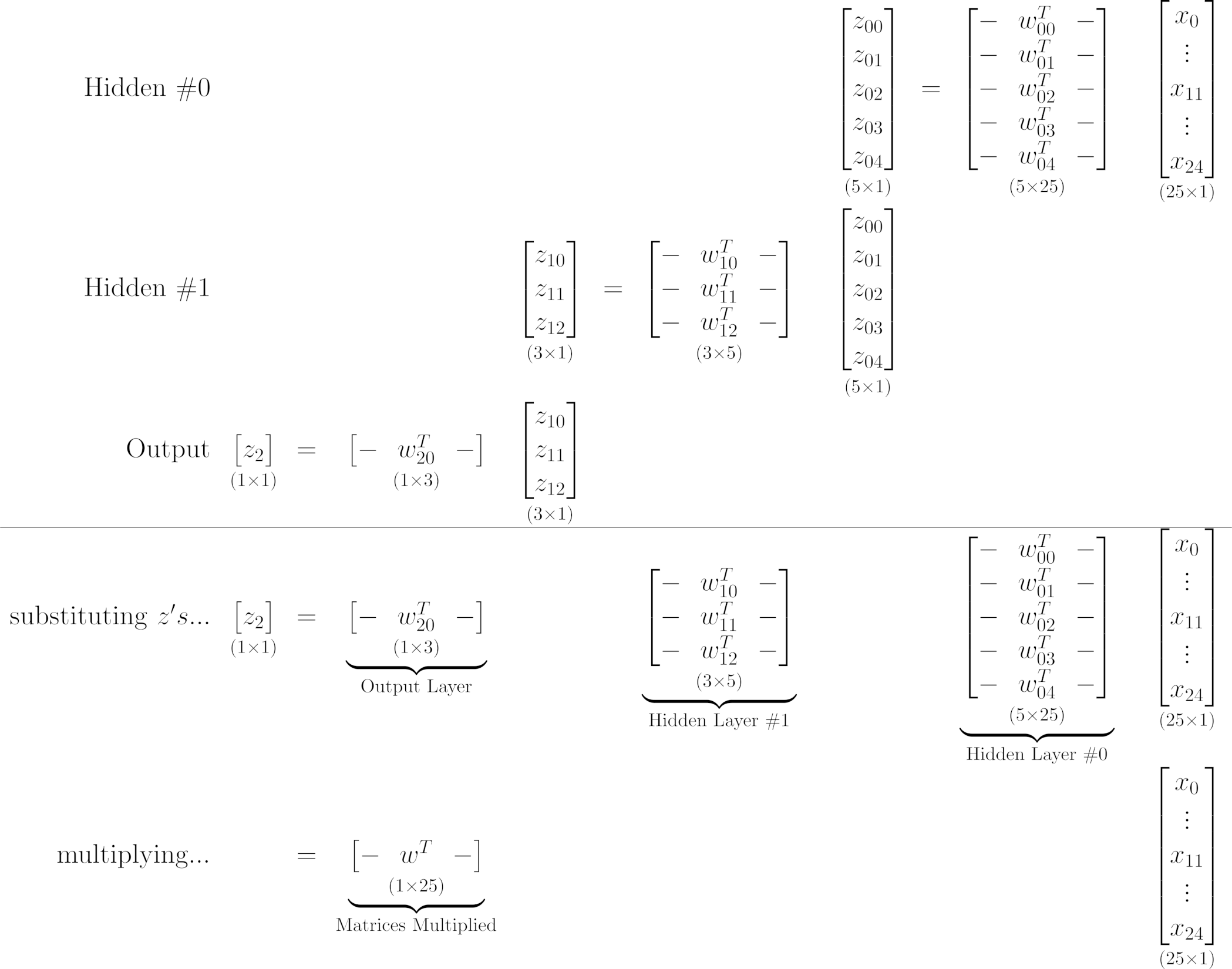

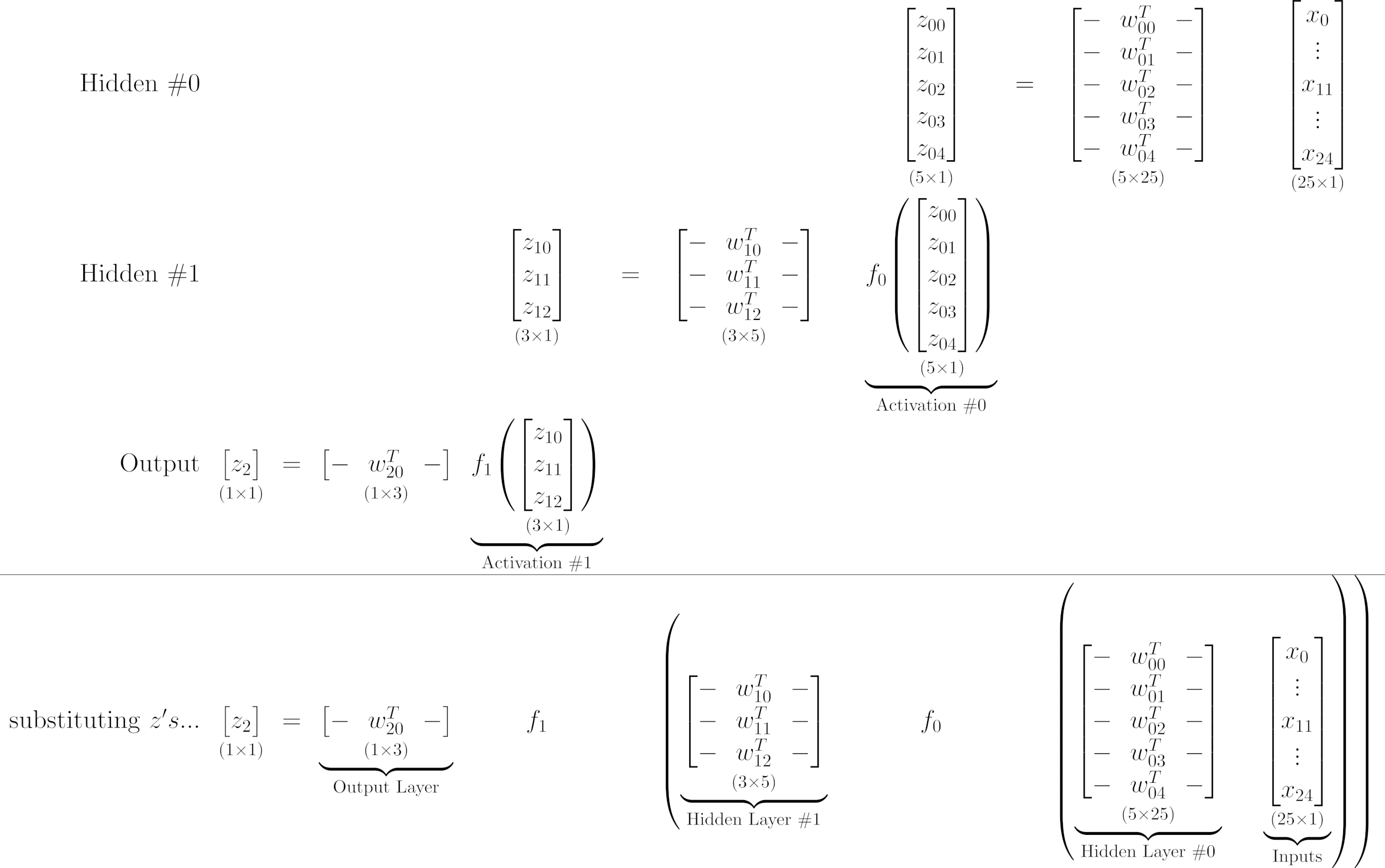
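With two hidden layers, the output of the first transformation becomes the input of the second, so the feature space is transformed twice. The sketch below chains two hypothetical 2-unit layers and applies the same activation after each one: tanh, or PReLU with an assumed fixed slope of 0.25. The weights are illustrative only and do not reproduce the figures.

```python
import math


def linear(W, b, x):
    """Affine map W @ x + b, with W given as a list of rows."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def layer(W, b, x, activation):
    """Affine transform followed by an element-wise activation."""
    return [activation(z) for z in linear(W, b, x)]


def prelu(z, a=0.25):
    """PReLU with a fixed, hypothetical slope a (it would be learned in training)."""
    return z if z >= 0 else a * z


# Hypothetical weights for two hidden layers with two units each.
W1, b1 = [[1.5, -1.0], [0.5, 2.0]], [0.1, -0.3]
W2, b2 = [[-2.0, 1.0], [1.0, 1.0]], [0.0, 0.2]


def two_hidden_layers(x, activation):
    """Transform a 2D point twice and return both intermediate feature vectors."""
    h1 = layer(W1, b1, x, activation)
    h2 = layer(W2, b2, h1, activation)
    return h1, h2


for name, fn in (("tanh", math.tanh), ("prelu", prelu)):
    for point in ([-1.0, -1.0], [1.0, 0.5], [2.0, -1.5]):
        h1, h2 = two_hidden_layers(point, fn)
        print(name, point, [round(v, 3) for v in h1], [round(v, 3) for v in h2])
```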

Matrix Operations

Without Activation Functions

Source: Chapter 4

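Without activation functions, stacking linear layers adds no expressive power. W2(W1x + b1) + b2 equals (W2W1)x + (W2b1 + b2), which is a single linear layer. The sketch below checks this with plain-Python matrix helpers and hypothetical integer weights, chosen so the comparison is exact.

```python
def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def add(u, v):
    return [ui + vi for ui, vi in zip(u, v)]


# Hypothetical integer weights so the comparison below is exact.
W1, b1 = [[1, 2], [3, -1], [0, 4]], [1, 0, -2]  # layer 1: 2 -> 3
W2, b2 = [[2, -1, 1], [0, 1, 3]], [5, -1]       # layer 2: 3 -> 2

# Collapsed single layer: W = W2 W1, b = W2 b1 + b2
W = matmul(W2, W1)
b = add(matvec(W2, b1), b2)

x = [3, -2]
two_layers = add(matvec(W2, add(matvec(W1, x), b1)), b2)
one_layer = add(matvec(W, x), b)
print(two_layers, one_layer)  # [-16, -20] [-16, -20]
```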

With Activation Functions

Source: Chapter 4

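Putting an activation function between the two matrix multiplications breaks that collapse. The sketch below uses hypothetical weights for a tiny 1 → 2 → 1 network. Without an activation, the network computes x - x = 0, which is trivially linear. With ReLU in between, it computes relu(x) + relu(-x) = |x|, which is not affine. An affine function f must satisfy f(0) = (f(-1) + f(1)) / 2, and |x| does not.

```python
def relu(z):
    return max(0, z)


def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def add(u, v):
    return [ui + vi for ui, vi in zip(u, v)]


# Hypothetical weights for a 1 -> 2 -> 1 network.
W1, b1 = [[1], [-1]], [0, 0]
W2, b2 = [[1, 1]], [0]


def net(x, activation=None):
    """Two linear layers, with an optional activation between them."""
    h = add(matvec(W1, [x]), b1)
    if activation is not None:
        h = [activation(z) for z in h]
    return add(matvec(W2, h), b2)[0]


xs = (-2, -1, 0, 1, 2)
print([net(x) for x in xs])        # [0, 0, 0, 0, 0]
print([net(x, relu) for x in xs])  # [2, 1, 0, 1, 2]
```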

This work is licensed under a Creative Commons Attribution 4.0 International License.