Optimizers and Schedulers

These images were originally published in the book “Deep Learning with PyTorch Step-by-Step: A Beginner’s Guide”.

They are also available at the book’s official repository: https://github.com/dvgodoy/PyTorchStepByStep.

Stochastic Gradient Descent

Source: Chapter 6

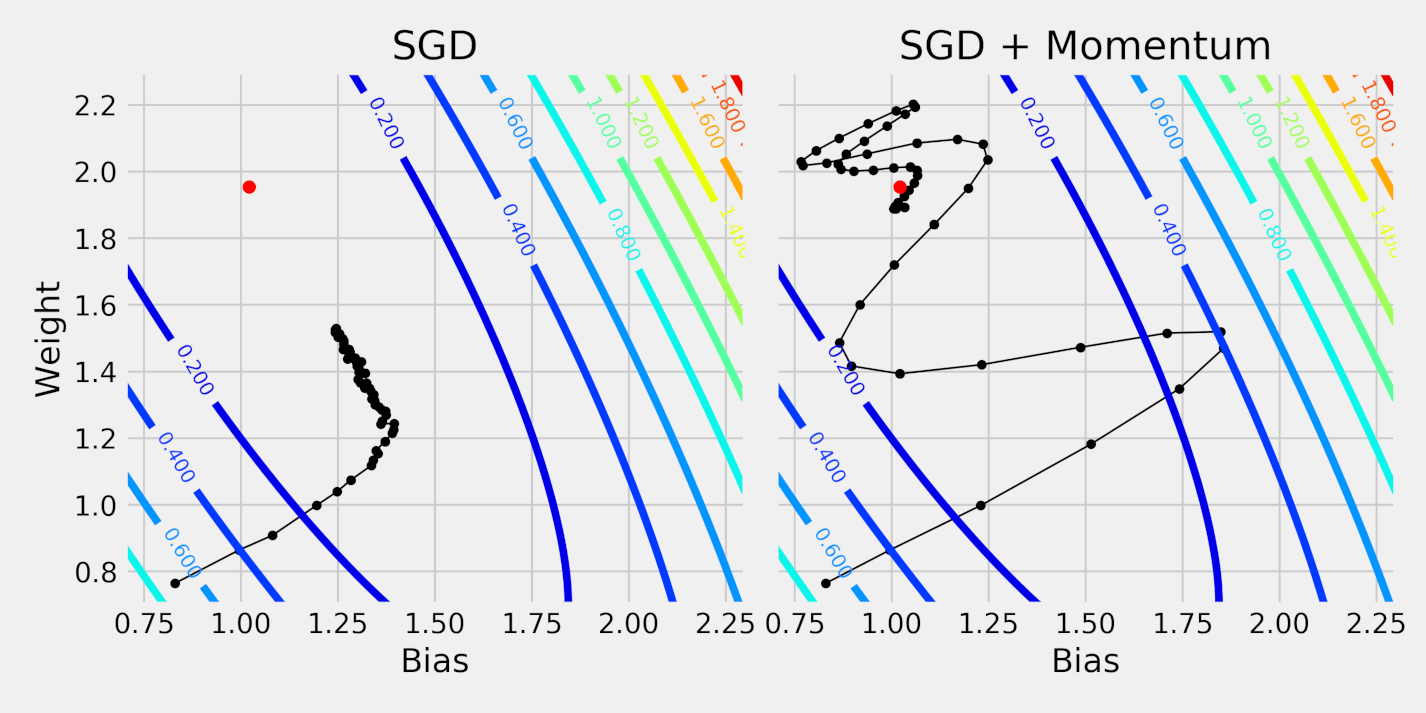
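
As a rough companion to the figure, the sketch below runs plain stochastic gradient descent on a hypothetical toy dataset generated from y = 1 + 2x. Each step draws one random example and moves both parameters against that example's gradient. The data, the learning rate, and the `sgd_step` helper are illustrative assumptions, not code from the book.

```python
import random

def sgd_step(params, grads, lr):
    # Plain SGD update: each parameter moves against its gradient, scaled by lr.
    return [p - lr * g for p, g in zip(params, grads)]

# Hypothetical toy data generated from y = 1 + 2x (no noise).
xs = [i / 10 for i in range(11)]
ys = [1.0 + 2.0 * x for x in xs]

rng = random.Random(42)
b, w = 0.0, 0.0
for _ in range(1000):
    # "Stochastic": the gradient comes from a single randomly drawn example.
    i = rng.randrange(len(xs))
    error = (b + w * xs[i]) - ys[i]
    # Gradients of the squared error (b + w*x - y)^2 with respect to b and w.
    grads = [2 * error, 2 * error * xs[i]]
    b, w = sgd_step([b, w], grads, lr=0.1)

print(round(b, 3), round(w, 3))
```

Because the toy data has no noise, every example shares the same minimum, so the parameters settle close to b = 1 and w = 2.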

Momentum Path

Source: Chapter 6

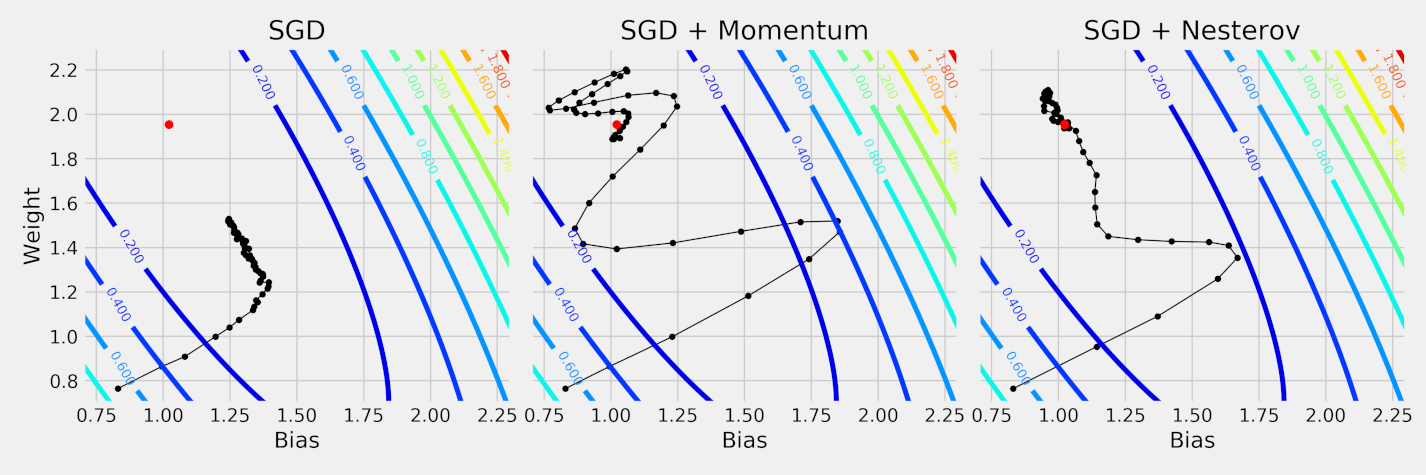
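
Here is a minimal sketch of the momentum update. It assumes the same convention as `torch.optim.SGD` with `momentum` set and no dampening: the velocity accumulates gradients (v ← μv + g), and the parameter moves along the velocity. The quadratic loss and the `momentum_step` helper are hypothetical.

```python
def momentum_step(p, grad, velocity, lr, mu):
    # Velocity is a decayed running sum of gradients: v <- mu * v + g.
    velocity = mu * velocity + grad
    # The parameter follows the velocity instead of the raw gradient.
    return p - lr * velocity, velocity

# Hypothetical loss L(p) = (p - 3)^2 with gradient 2 * (p - 3).
def grad_fn(p):
    return 2.0 * (p - 3.0)

p, velocity = 0.0, 0.0
path = [p]
for _ in range(300):
    p, velocity = momentum_step(p, grad_fn(p), velocity, lr=0.05, mu=0.9)
    path.append(p)

# Accumulated velocity carries the parameter past the minimum at 3
# before it settles, producing an overshooting path.
print(max(path), p)
```

With `mu=0.0` the helper reduces to the plain SGD update.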

Nesterov Path

Source: Chapter 6
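
PyTorch enables Nesterov momentum through `torch.optim.SGD(..., momentum=mu, nesterov=True)`. The sketch below assumes that convention. The velocity is updated as in plain momentum, but the step uses the gradient plus the decayed velocity (g + μv). The toy loss and the `nesterov_step` helper are hypothetical.

```python
def nesterov_step(p, grad, velocity, lr, mu):
    # Same velocity update as plain momentum: v <- mu * v + g.
    velocity = mu * velocity + grad
    # Nesterov variant: step along g + mu * v instead of v alone.
    return p - lr * (grad + mu * velocity), velocity

# Hypothetical loss L(p) = (p - 3)^2 with gradient 2 * (p - 3).
def grad_fn(p):
    return 2.0 * (p - 3.0)

p, velocity = 0.0, 0.0
for _ in range(300):
    p, velocity = nesterov_step(p, grad_fn(p), velocity, lr=0.05, mu=0.9)
print(p)
```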

Adam

Source: Chapter 6

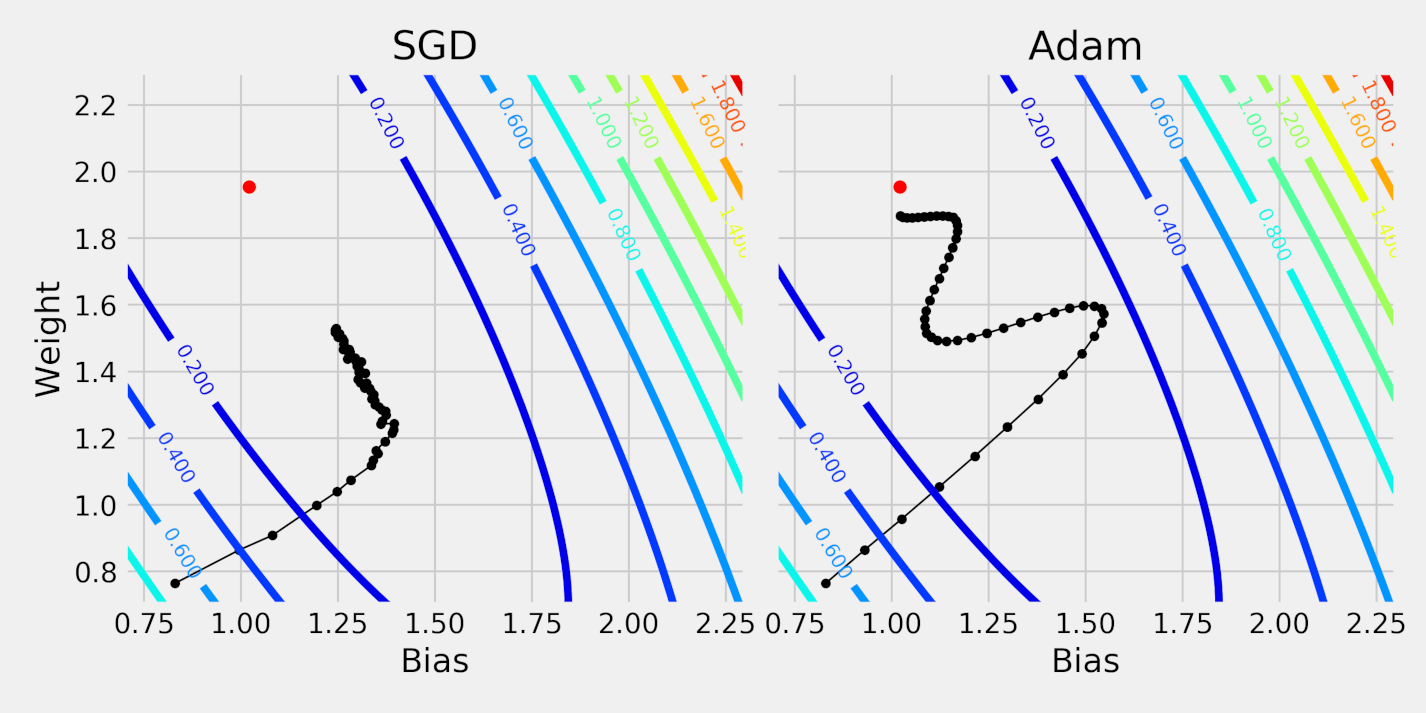
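
The sketch below performs a single Adam update for a scalar parameter, using the bias-corrected moving averages of the original algorithm. Its defaults mirror those of `torch.optim.Adam` (`lr=0.001`, `betas=(0.9, 0.999)`, `eps=1e-8`). The `adam_step` helper is hypothetical.

```python
import math

def adam_step(p, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    # Exponentially weighted averages of the gradient and the squared gradient.
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad ** 2
    # Bias correction: both averages start at zero, so early values are scaled up.
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    # The step is scaled per parameter by the root of the squared-gradient average.
    p = p - lr * m_hat / (math.sqrt(v_hat) + eps)
    return p, m, v

# On the first step (t=1), the update is about lr in size whatever the gradient's scale.
small = adam_step(0.0, -6.0, 0.0, 0.0, t=1, lr=0.1)[0]
large = adam_step(0.0, -6000.0, 0.0, 0.0, t=1, lr=0.1)[0]
print(small, large)
```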

SGD vs Adam Paths

Source: Chapter 6

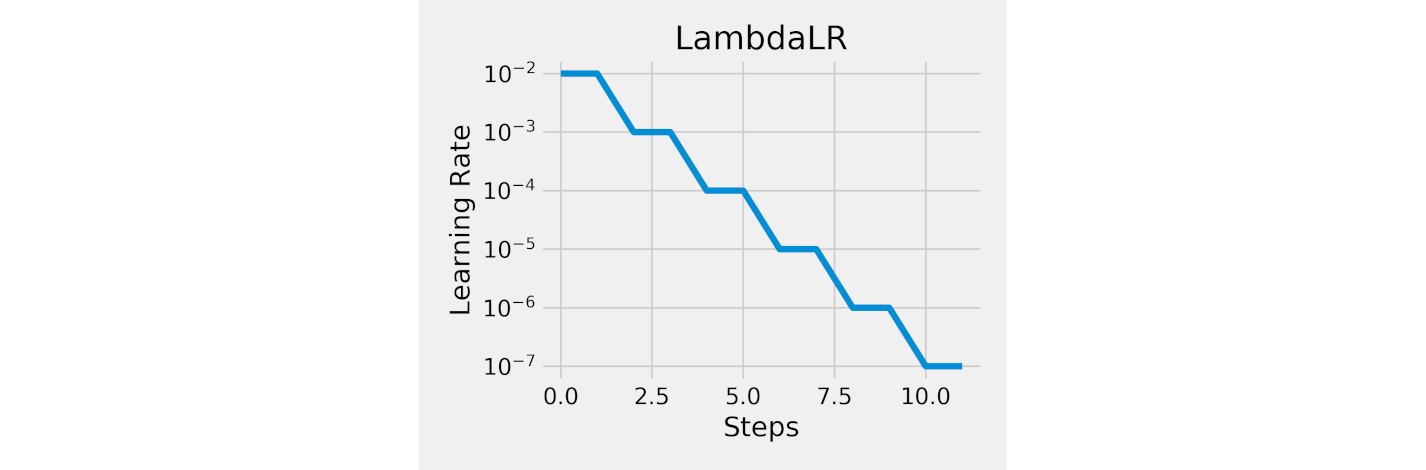

Schedulers

Step

Source: Chapter 6
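
The step schedule multiplies the learning rate by a factor `gamma` every `step_size` epochs, which is what `torch.optim.lr_scheduler.StepLR` does. The closed-form sketch below uses a hypothetical helper, `step_lr`:

```python
def step_lr(initial_lr, epoch, step_size, gamma=0.1):
    # The number of completed steps determines how many times gamma has been applied.
    return initial_lr * gamma ** (epoch // step_size)

lrs = [step_lr(0.1, epoch, step_size=2, gamma=0.5) for epoch in range(6)]
print(lrs)
```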

Plateau

Source: Chapter 6

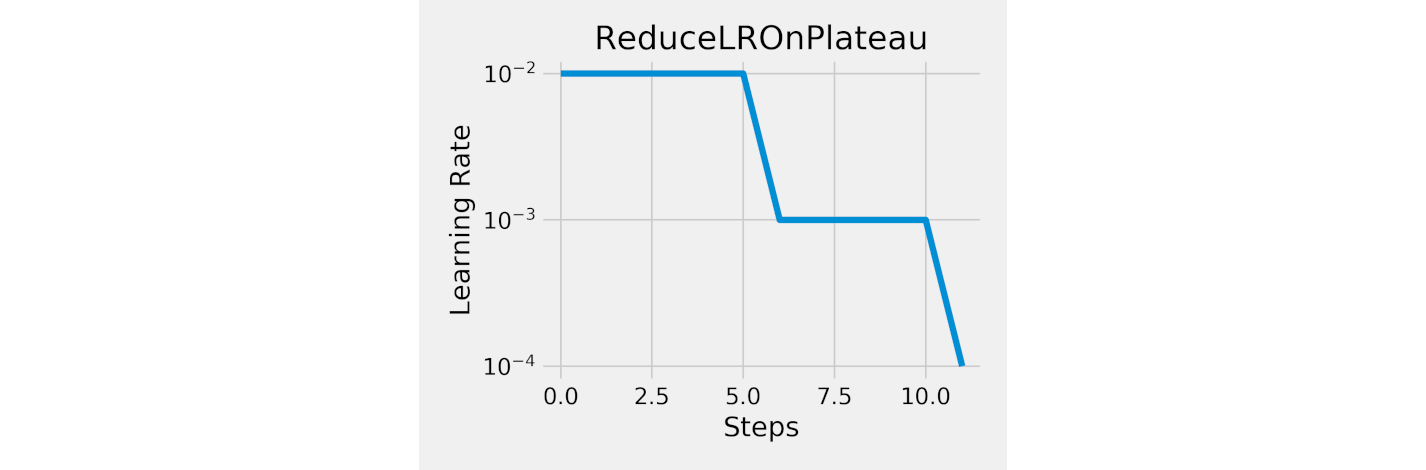
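
`torch.optim.lr_scheduler.ReduceLROnPlateau` lowers the learning rate by `factor` once a monitored metric (typically the validation loss) has gone more than `patience` epochs without improving. The class below is a simplified sketch of that logic. It assumes a strict "lower is better" comparison and ignores the real scheduler's `threshold`, `cooldown`, and `min_lr` options. The `PlateauScheduler` name is hypothetical.

```python
import math

class PlateauScheduler:
    def __init__(self, lr, factor=0.1, patience=10):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.best = math.inf
        self.num_bad_epochs = 0

    def step(self, val_loss):
        # Any strict improvement resets the count of bad epochs.
        if val_loss < self.best:
            self.best = val_loss
            self.num_bad_epochs = 0
        else:
            self.num_bad_epochs += 1
        # Reduce only after more than `patience` epochs without improvement.
        if self.num_bad_epochs > self.patience:
            self.lr *= self.factor
            self.num_bad_epochs = 0
        return self.lr

scheduler = PlateauScheduler(lr=0.1, factor=0.5, patience=2)
lrs = [scheduler.step(loss) for loss in [1.0, 0.9, 0.9, 0.9, 0.9]]
print(lrs)
```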

Cyclical

Source: Chapter 6

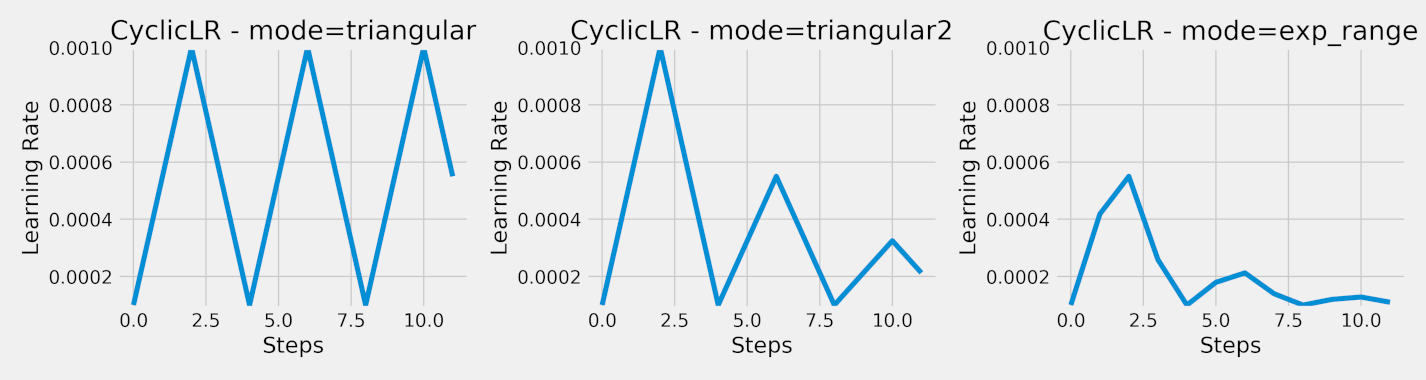
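
Cyclical learning rates move the learning rate back and forth between a lower and an upper bound. The sketch below implements the "triangular" policy, which is the default `mode` of `torch.optim.lr_scheduler.CyclicLR`, as a closed-form function of the iteration count. Here `step_size` is the number of iterations in half a cycle (called `step_size_up` in PyTorch). The helper name is hypothetical.

```python
import math

def triangular_lr(iteration, base_lr, max_lr, step_size):
    # Current cycle (1-based); a full cycle lasts 2 * step_size iterations.
    cycle = math.floor(1 + iteration / (2 * step_size))
    # x goes 1 -> 0 -> 1 over a cycle, so (1 - x) rises and then falls linearly.
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1 - x)

lrs = [triangular_lr(i, base_lr=0.001, max_lr=0.01, step_size=4) for i in range(9)]
print(lrs)
```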

Paths

Source: Chapter 6

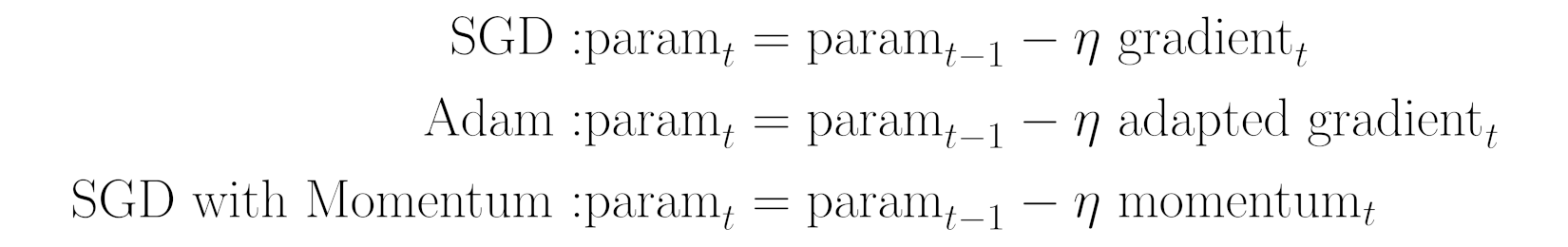

Parameter Update

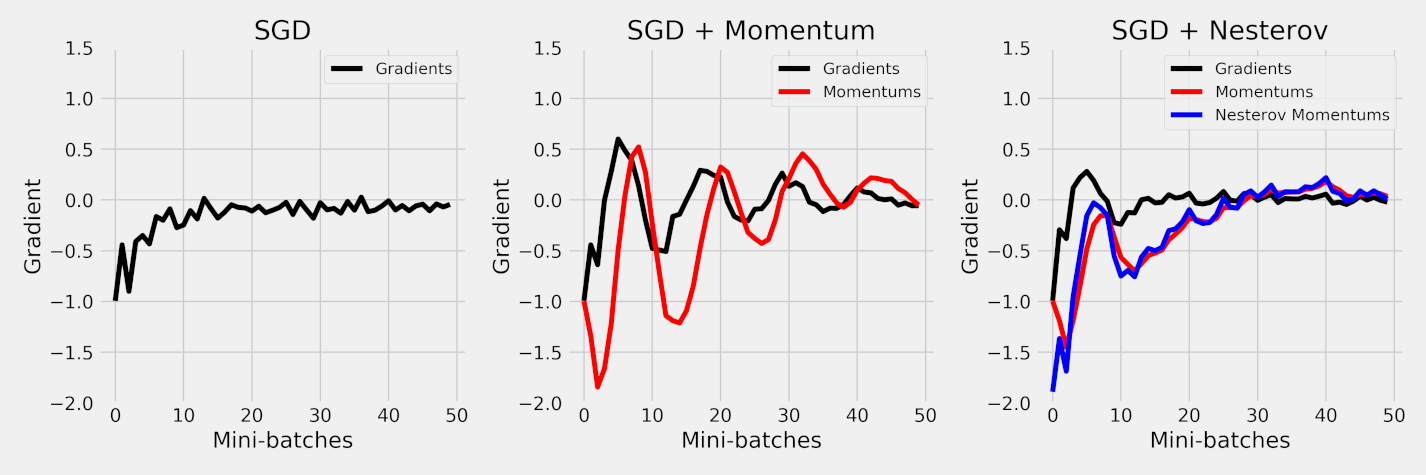

SGD vs Adam vs Momentum

Source: Chapter 6

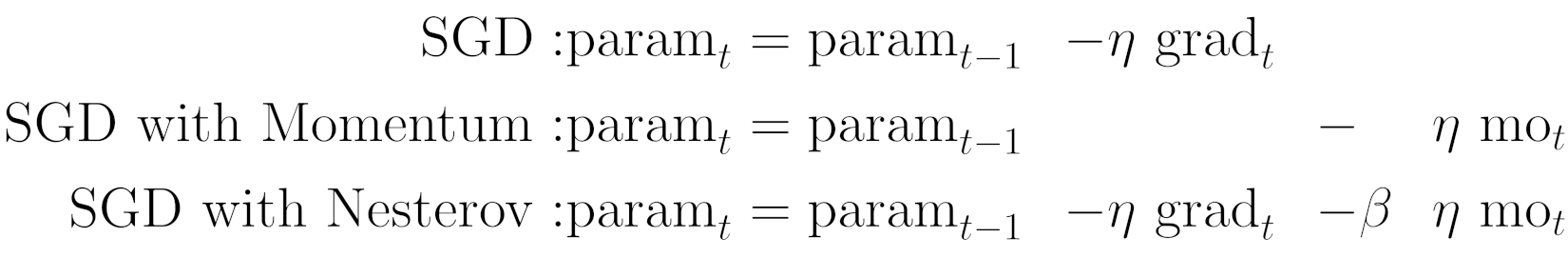

SGD vs Momentum vs Nesterov

Source: Chapter 6

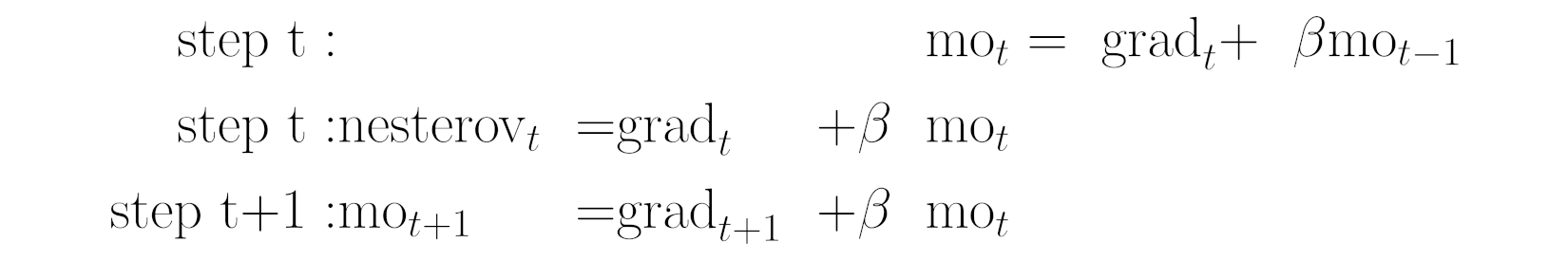

Nesterov Look-Ahead

Source: Chapter 6
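
In the look-ahead view of Nesterov momentum, the gradient is evaluated at the point the accumulated momentum is about to carry the parameter to, rather than at the current parameter. The sketch below uses that classic formulation: v ← μv − η∇L(p + μv), then p ← p + v. In this form the velocity already includes the learning rate. PyTorch's `nesterov=True` option instead uses a rearranged update that only needs the gradient at the current parameter. The toy loss and the helper are hypothetical.

```python
def nesterov_lookahead_step(p, velocity, grad_fn, lr, mu):
    # Peek ahead to where momentum alone would take the parameter.
    lookahead = p + mu * velocity
    # Correct the velocity using the gradient at that look-ahead point.
    velocity = mu * velocity - lr * grad_fn(lookahead)
    return p + velocity, velocity

# Hypothetical loss L(p) = (p - 3)^2 with gradient 2 * (p - 3).
def grad_fn(p):
    return 2.0 * (p - 3.0)

p, velocity = 0.0, 0.0
for _ in range(300):
    p, velocity = nesterov_lookahead_step(p, velocity, grad_fn, lr=0.05, mu=0.9)
print(p)
```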

This work is licensed under a Creative Commons Attribution 4.0 International License.